Challenge


Participating Team

- Short team name: VisTrails

- Participant names: Erik Anderson, Steven Callahan, Juliana Freire, David Koop, Emanuele Santos, Carlos Scheidegger, Claudio Silva, Nathan Smith and Huy Vo

- Project URL: http://www.sci.utah.edu/~vgc/vistrails

- Project Overview:

- Provenance-specific Overview:

- Relevant Publications:

- Managing Rapidly-Evolving Scientific Workflows (by Juliana Freire, Claudio T. Silva, Steven P. Callahan, Emanuele Santos, Carlos E. Scheidegger and Huy T. Vo) Invited paper. In Proceedings of the International Provenance and Annotation Workshop (IPAW), 2006.

- Using Provenance to Streamline Data Exploration through Visualization (by Steven P. Callahan, Juliana Freire, Emanuele Santos, Carlos E. Scheidegger, Claudio T. Silva and Huy T. Vo) SCI Institute Technical Report, No. UUSCI-2006-016, University of Utah, 2006.

- VisTrails: Enabling Interactive Multiple-View Visualizations (by Louis Bavoil, Steven P. Callahan, Patricia J. Crossno, Juliana Freire, Carlos E. Scheidegger, Claudio T. Silva and Huy T. Vo) In Proceedings of IEEE Visualization, 2005.

Workflow Representation

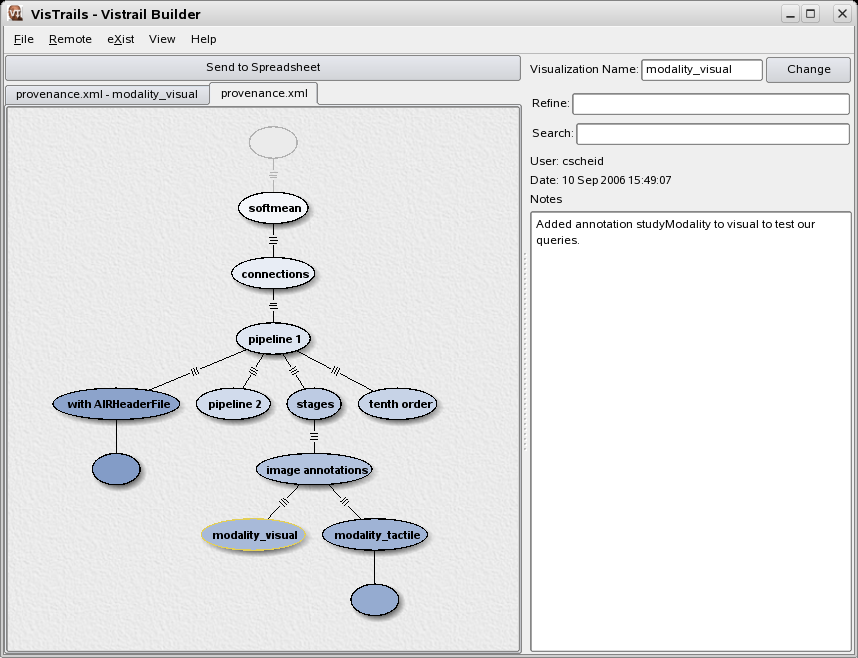

A novel feature of VisTrails is an action-based model for capturing provenance. The action-based model captures the history of changes to both parameter values and workflow definitions by unobtrusively tracking all modifications a user (or group of users) applies to a set of related workflows in an exploratory task. A vistrail is illustrated in Figure 1. The nodes in a vistrail tree represent workflows, and an edge between two workflows represents the set of changes applied to the parent to obtain the child workflow (e.g., the addition of a module or the modification of a parameter). The workflow instance corresponding to a vistrail node n can be constructed by replaying all the actions on the path from the root of the tree down to n. In a nutshell, a vistrail represents several versions of a workflow (which differ in their specifications), their relationships, and their instances (which differ in the parameter values used).

Figure 1 – VisTrails maintains information about the evolution of workflows. The nodes in this tree correspond to different workflow versions created for the challenge.

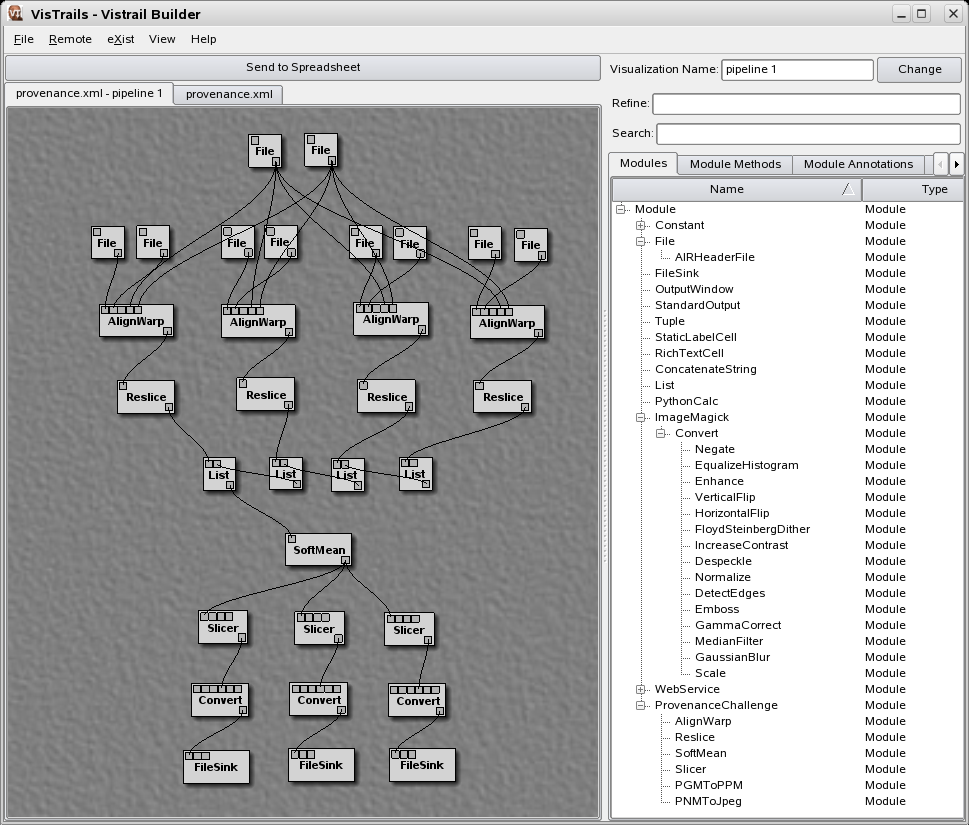

Figure 2 – The visual programming interface of the VisTrails Builder, showing the first workflow of the challenge (the node labeled 'pipeline 1' in Figure 1).

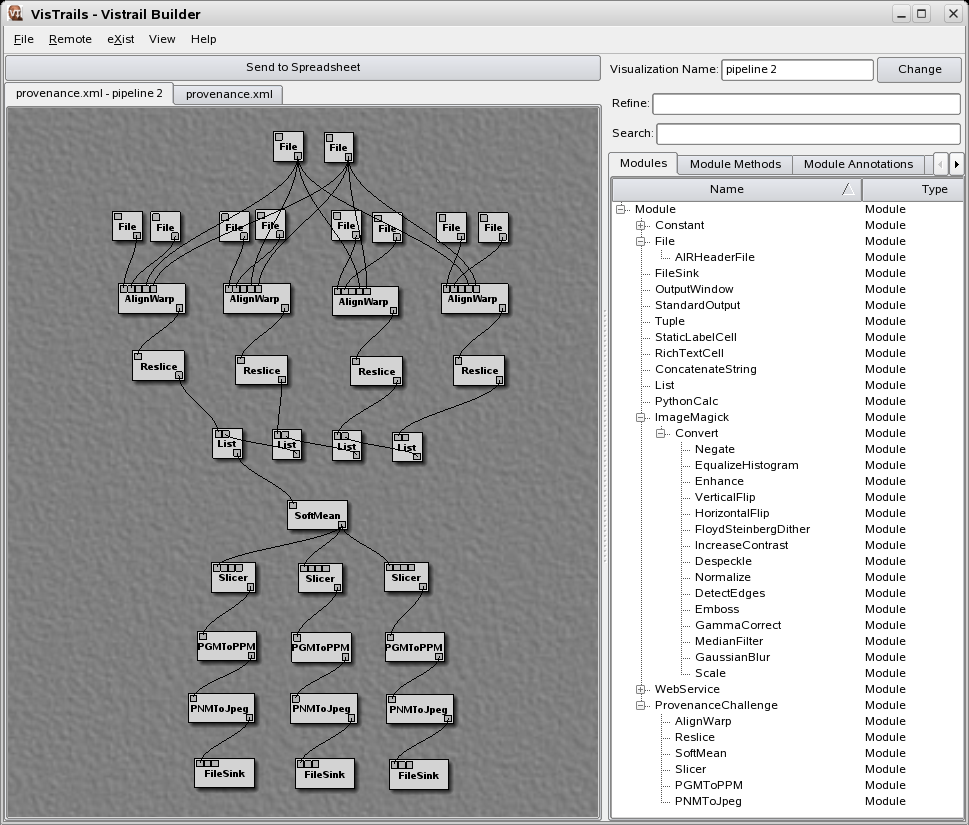

Figure 3 – The visual programming interface of the VisTrails Builder, showing the second workflow of the challenge (the node labeled 'pipeline 2' in Figure 1).

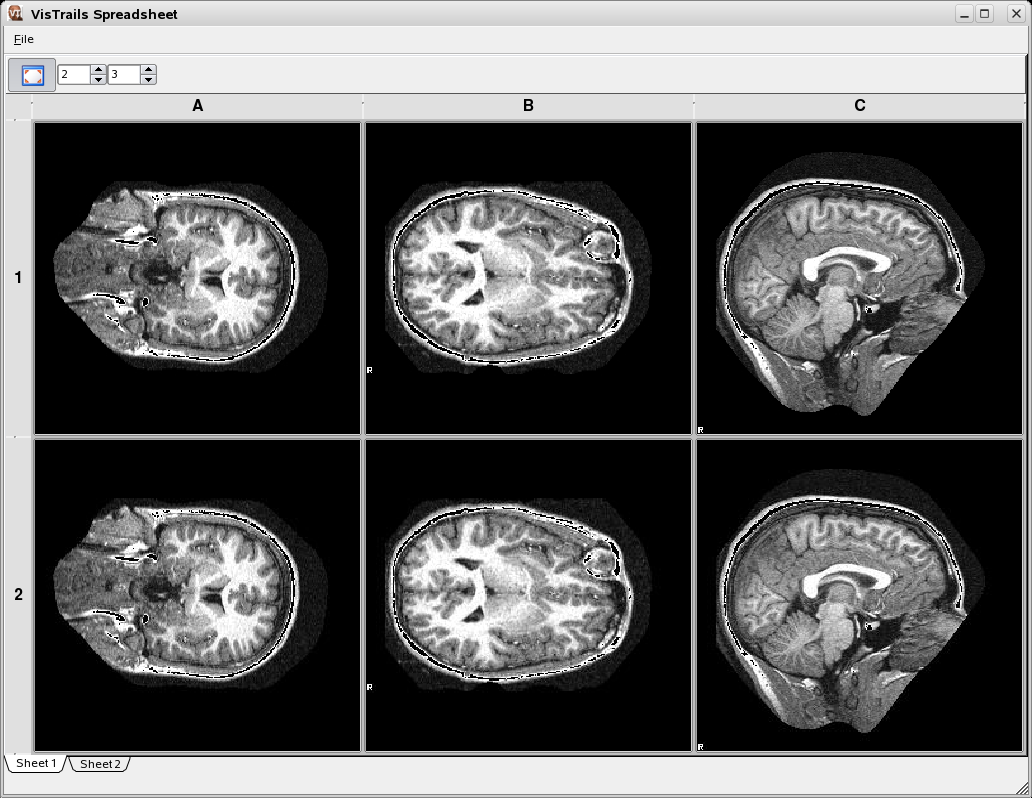

Figure 4 – The VisTrails spreadsheet allows comparative visualization by showing the output of both workflows used in the challenge. The output of the first workflow is shown in the top three images as x, y, and z slices. The results of the second workflow are shown in the bottom three images.

This action-based representation is both simple and compact: it uses substantially less space than the alternative of storing multiple separate versions of a workflow. In addition, it enables an intuitive interface that allows scientists to both understand and interact with the history of the workflow through these changes.
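The replay step can be sketched in a few lines of Python. This is a minimal, hypothetical model, not VisTrails' actual classes. Each version stores only its parent and the list of actions applied to that parent. The action names `add_module`, `delete_module`, `set_param` and `connect` are illustrative. A workflow is rebuilt by replaying the actions along the path from the root.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Version:
    parent: Optional[int]                         # None only for the root
    actions: list = field(default_factory=list)  # changes applied to the parent


class Vistrail:
    """Hypothetical version tree: nodes store actions, not whole workflows."""

    def __init__(self):
        self.versions = {0: Version(None)}  # version 0 is the empty workflow

    def add_version(self, parent, actions):
        vid = len(self.versions)
        self.versions[vid] = Version(parent, list(actions))
        return vid

    def workflow(self, vid):
        """Rebuild the workflow for `vid` by replaying actions from the root down."""
        path = []
        while vid is not None:
            path.append(vid)
            vid = self.versions[vid].parent
        modules, connections = {}, set()
        for v in reversed(path):
            for op, *args in self.versions[v].actions:
                if op == "add_module":
                    mid, mtype = args
                    modules[mid] = {"type": mtype, "params": {}}
                elif op == "delete_module":
                    (mid,) = args
                    del modules[mid]
                    connections = {c for c in connections if mid not in c}
                elif op == "set_param":
                    mid, name, value = args
                    modules[mid]["params"][name] = value
                elif op == "connect":
                    src, dst = args
                    connections.add((src, dst))
                else:
                    raise ValueError(f"unknown action: {op!r}")
        return modules, connections


vt = Vistrail()
p1 = vt.add_version(0, [("add_module", 1, "AlignWarp"), ("set_param", 1, "model", "12")])
p2 = vt.add_version(p1, [("set_param", 1, "model", "6")])                    # parameter change
p3 = vt.add_version(p1, [("add_module", 2, "SoftMean"), ("connect", 1, 2)])  # sibling branch
print(vt.workflow(p2))
print(vt.workflow(p3))
```

Here p2 and p3 both derive from p1 without copying its actions. Sharing actions this way, rather than storing a full copy of each version, is what keeps the representation compact.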

The stored provenance ensures reproducibility of the data products, and it also allows scientists to easily navigate through the space of distinct workflows created for a given exploration task. In particular, it gives users the ability to return to previous versions of a workflow and/or different parameter settings and comparatively visualize their results; to undo bad changes; and to compare different workflows.

Another important feature of the action-based provenance model is that it enables a series of operations that greatly simplify the exploration process and have the potential to reduce the time to insight. In particular, it allows the flexible re-use of workflows; it provides a scalable mechanism for creating and comparing a large number of data products as well as their corresponding workflows; and it enables collaboration in a distributed setting (see [2] for details).

What we changed

Some of the queries in this challenge required information that was not captured by our provenance mechanism. In particular, since the focus of our project has been on workflow design, we had no mechanism for logging information about workflow execution.

The Execution Log

We have added a separate process that logs information about the execution of workflow instances in a MySQL database. The current mechanism is very simple: we store only the machine on which a workflow (or an individual module) ran, its running time, and, as we explain below, any metadata produced during the execution. Each execution record is associated with a workflow instance, which is identified by the (unique) id of a vistrail node. Note that the logging process can easily be replaced with more sophisticated mechanisms; all that is required is the ability to access the log records associated with a vistrail node.

The VisTrails Query Language

While implementing the challenge queries, and in keeping with our goal of providing tools that scientists can use, we designed a new language for querying the workflow evolution history, individual workflows, and log information in an integrated way. In the future, we plan to provide a visual query interface that hides the syntax of this language. We have designed a set of vistrail-specific constructs that work across the different metadata layers in our system, including constructs for:

- retrieving information from the vistrail structure, i.e., versions of workflows (e.g., all versions derived from a given workflow)

- retrieving information about individual workflows and modules (e.g., all modules located upstream of a given module)

- retrieving information about execution log records

Adding new modules to execute challenge workflows

VisTrails has a plugin mechanism that makes it very easy to add new modules. All that is required is a simple Python wrapper for the functions being imported; no changes to the GUI are needed. A brief overview of the plugin mechanism, and an example of one of the wrappers we created for the challenge, can be found at: ModuleWrappersForVisTrails

One issue we encountered when executing the AIR tools is that they do not provide a 'clean' API. For example, a function call may reference a file with extension .hdr (e.g., junk.hdr) while the function actually opens a file with the same name but with extension .img (i.e., junk.img). In addition, the tools create files that are not mentioned anywhere in the pipeline. Without low-level access to file creation in the operating system, we cannot track these side effects. Thus, we provide provenance for the workflows and executions as specified by the API of each tool.

The Workflows

By wrapping the tools used in the challenge, we obtain a list of functions and parameters that are available to a user. VisTrails provides a visual programming interface for building workflows based on the functions (which become modules) provided by the tools. This Builder facilitates the creation of workflows by allowing the user to add, delete, and connect modules as well as specify parameters. Thus, building the workflows for the challenge was easily done with our point-and-click interface. Figures 2 and 3 show the two workflows we built with this interface for the challenge.

We added a few extra features to the VisTrails Builder to extend our querying capabilities. Each node in a vistrail can be annotated with text describing how the node was created. In addition, modules in the Builder can be annotated with key-value pairs. Finally, we display the execution log of each module in a side panel along with its parameters and annotations. Together, the annotations and execution log records allow us to answer all the queries, because they cover both the specification and the execution of the workflows.

Provenance Trace

As described above, our system deals with three layers of metadata: the workflow evolution (the relationships among the series of workflows created in an exploratory task), individual workflows, and run-time information. The workflows and their relationships are encoded in the vistrail tree, which we represent as an XML file; the run-time information is saved in a MySQL database. The vistrail capturing the different versions of workflows we created for the challenge is shown in Figure 1, and its XML representation is the attached file provenance.xml. A textual representation of the run-time log entries used to answer the challenge queries can be found at: LogRecords

Provenance Queries Matrix

| Teams | Q1 | Q2 | Q3 | Q4 | Q5 | Q6 | Q7 | Q8 | Q9 |
|---|---|---|---|---|---|---|---|---|---|
| VisTrails team |  |  |  |  |  |  |  |  |  |

Provenance Queries

Because one of the main goals of VisTrails is to provide an intuitive user interface, we answer all of our queries visually, by highlighting the versions and modules in the VisTrails Builder that match the query. The matching versions, modules, and parameters can then be explored interactively by the user. Currently, queries for versions and modules are performed through a Search box, and queries that involve finding the difference between workflow versions are performed by dragging and dropping one node of the vistrail onto another.

The structure of a vistrail plays an important role in the design of our query language and, as outlined earlier, the language is broken into pieces that relate to (a) versions, (b) workflows, and (c) executions. Note that a vistrail consists of a tree of workflows, and each individual workflow may be associated with many executions. To tie these different pieces of information together, we use relational algebra operations. The VisTrails query language is being designed to balance generality with simplicity, and breaking queries down into the elements they relate to clarifies both what is being asked and how the query is executed. Specific constructs have been added to deal with the structure of a vistrail and of a workflow, and these functions simplify the queries. Finally, while the language is defined so that specific pieces of information can be returned, we present our results visually: we display matching versions, modules, and executions in the context of the relevant data structure. We begin by defining the three levels of the query and the relevant functions.

- vt: specifies criteria for versions. At this level, we can ask for versions created by a given user at a given time, or search for versions that incorporate specific pipelines.

- wf: specifies criteria for workflows. At this level, we can ask for modules with a given function or annotation, or search for workflows that use certain input files.

- log: specifies criteria for executions. At this level, we can ask for executions that occurred on a Monday or whose running time was greater than an hour.

Functions:

- upstream(x) takes a module or set of modules and returns all modules that precede the given module(s) in the workflow. Note that upstream does not include the argument module itself.

- inputs(x) takes a module or set of modules and returns all modules that immediately precede the given module(s) in the workflow.

- executed(x) takes a module or set of modules and returns true if the module(s) have been executed (there is an entry in the execution log) or false otherwise.

Version attributes:

- annotation(key): gives the value of an annotation for the given key

- user: gives the user who saved the version

Module attributes:

- annotation(key): gives the value of an annotation for the given key

- name: gives the type of module

- parameter(name): gives the value of a parameter with the given name

Execution attributes:

- annotation(key): gives the value of an annotation for the given key

- dayOfWeek: gives the day of the week on which the execution occurred.

- time: gives the time the execution occurred.
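The workflow-level functions and module attributes can be sketched over a small in-memory graph. The encoding is hypothetical, not VisTrails' internal format: module id maps to (type, parameters, annotations), connections are (upstream, downstream) pairs, and the execution log is reduced to the set of ids that have at least one record. For a set argument, `executed` is assumed to require that every module has run.

```python
# Hypothetical encoding of one workflow; ids and values are illustrative.
MODULES = {
    "anat1": ("File", {"name": "anatomy1.hdr"}, {}),
    "warp1": ("AlignWarp", {"model": "12"}, {"stage": "1"}),
    "mean": ("SoftMean", {}, {"stage": "3"}),
}
EDGES = [("anat1", "warp1"), ("warp1", "mean")]  # (upstream id, downstream id)
EXECUTION_LOG = {"anat1", "warp1"}                # ids with at least one log record


def inputs(xs):
    """Modules that immediately precede any module in `xs`."""
    return {src for src, dst in EDGES if dst in xs}


def upstream(xs):
    """All modules that transitively precede the modules in `xs`."""
    result, frontier = set(), inputs(xs)
    while frontier:
        result |= frontier
        frontier = inputs(frontier) - result  # skip already-visited modules
    return result


def executed(xs):
    """True if every module in `xs` has an entry in the execution log (assumption)."""
    return set(xs) <= EXECUTION_LOG


# Accessors mirroring the name, parameter(name) and annotation(key) attributes.
def name(x):
    return MODULES[x][0]


def parameter(x, key):
    return MODULES[x][1].get(key)


def annotation(x, key):
    return MODULES[x][2].get(key)
```

For example, `upstream({"mean"})` yields `{"anat1", "warp1"}`, while `inputs({"mean"})` yields only `{"warp1"}`.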

Queries

1. Find the process that led to Atlas X Graphic / everything that caused Atlas X Graphic to be as it is. This should tell us the new brain images from which the averaged atlas was generated, the warping performed etc.

We begin by separating the pieces of this query into the different tiers. Because the query asks about processes without specifying any versioning information, we are interested in all versions. At the workflow level, we wish to find all workflows that include the generation of the 'atlas-x.gif' image. In our model, this file is created by a FileSink module, so our query asks for all modules whose type is FileSink and whose name parameter is 'atlas-x.gif'. If we stopped the query at this point, we would retrieve all workflows, partial and complete, that include modules meeting these criteria. However, we interpret this query as asking only for workflows in which this file has actually been generated. We enforce this by requiring that the module that generates the file was actually executed.

wf{*}: upstream(x) union x where x.module = FileSink and x.parameter('name') = 'atlas-x.gif' and executed(x)

Visual Results
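Query 1 can be evaluated over a toy workflow as a minimal sketch. All module ids, the data, and the type names Reslice, Slicer and Convert are hypothetical. The averaged atlas is sliced along x and y, but only the x branch has been executed, so the y branch should not appear in the answer.

```python
# Hypothetical toy workflow; only the x-slice branch appears in the log.
MODULES = {
    "anat1": ("File", {"name": "anatomy1.hdr"}),
    "ref": ("File", {"name": "reference.hdr"}),
    "warp1": ("AlignWarp", {"model": "12"}),
    "resl1": ("Reslice", {}),
    "mean": ("SoftMean", {}),
    "slx": ("Slicer", {"axis": "x"}),
    "cvx": ("Convert", {}),
    "sinkx": ("FileSink", {"name": "atlas-x.gif"}),
    "sly": ("Slicer", {"axis": "y"}),
    "cvy": ("Convert", {}),
    "sinky": ("FileSink", {"name": "atlas-y.gif"}),
}
EDGES = [
    ("anat1", "warp1"), ("ref", "warp1"), ("warp1", "resl1"), ("resl1", "mean"),
    ("mean", "slx"), ("slx", "cvx"), ("cvx", "sinkx"),
    ("mean", "sly"), ("sly", "cvy"), ("cvy", "sinky"),
]
EXECUTED = {"anat1", "ref", "warp1", "resl1", "mean", "slx", "cvx", "sinkx"}


def upstream(xs):
    """All modules that transitively precede the modules in `xs`."""
    result, frontier = set(), {s for s, d in EDGES if d in xs}
    while frontier:
        result |= frontier
        frontier = {s for s, d in EDGES if d in frontier} - result
    return result


def query1():
    """upstream(x) union x, where x is an executed FileSink named atlas-x.gif."""
    answer = set()
    for x, (mtype, params) in MODULES.items():
        if mtype == "FileSink" and params.get("name") == "atlas-x.gif" and x in EXECUTED:
            answer |= upstream({x}) | {x}
    return answer


print(sorted(query1()))
```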

2. Find the process that led to Atlas X Graphic, excluding everything prior to the averaging of images with softmean.

Here, we perform a query similar to the first one, except that we must exclude all modules that precede SoftMean in the workflow. The query simply subtracts these modules out.

wf{*}: (upstream(x) union x) - upstream(y) where x.module = FileSink and x.parameter('name') = 'atlas-x.gif' and executed(x) and y.module = SoftMean

Visual Results
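The set difference in query 2 can be sketched on a hypothetical single-chain workflow. Ids and the types Reslice, Slicer and Convert are illustrative, and the whole chain is assumed to have run. Because `upstream` excludes its argument, the SoftMean module itself survives the subtraction.

```python
# Hypothetical single-chain workflow: each module feeds the next one.
MODULES = {
    "anat1": ("File", {"name": "anatomy1.hdr"}),
    "warp1": ("AlignWarp", {"model": "12"}),
    "resl1": ("Reslice", {}),
    "mean": ("SoftMean", {}),
    "slx": ("Slicer", {"axis": "x"}),
    "cvx": ("Convert", {}),
    "sinkx": ("FileSink", {"name": "atlas-x.gif"}),
}
ORDER = list(MODULES)                 # dicts keep insertion order
EDGES = list(zip(ORDER, ORDER[1:]))   # (upstream id, downstream id)
EXECUTED = set(MODULES)               # assume the whole chain has run


def upstream(xs):
    """All modules that transitively precede the modules in `xs`."""
    result, frontier = set(), {s for s, d in EDGES if d in xs}
    while frontier:
        result |= frontier
        frontier = {s for s, d in EDGES if d in frontier} - result
    return result


def query2():
    xs = {x for x, (t, p) in MODULES.items()
          if t == "FileSink" and p.get("name") == "atlas-x.gif" and x in EXECUTED}
    ys = {y for y, (t, _) in MODULES.items() if t == "SoftMean"}
    # Provenance of atlas-x.gif minus everything that precedes SoftMean.
    return (upstream(xs) | xs) - upstream(ys)


print(sorted(query2()))
```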

3. Find the Stage 3, 4 and 5 details of the process that led to Atlas X Graphic.

First, we need to define how VisTrails handles these stage details. Because they are user-defined, we use the annotation feature to attach this information to the modules as key-value pairs: the key is "stage" and the values are as listed in the workflow description. The query is then exactly like the first, except that we also check that the upstream modules carry matching annotations.

wf{*}: y where x.module = FileSink and x.parameter('name') = 'atlas-x.gif' and executed(x) and y in (upstream(x) union x) and y.annotation('stage') in {'3','4','5'}

Visual Results
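The intent described for query 3 is to keep only those modules in the provenance of atlas-x.gif whose "stage" annotation is 3, 4 or 5. A minimal sketch of that filter follows. Module ids, the types Reslice, Slicer and Convert, and the stage assignments are hypothetical.

```python
# Hypothetical chain with module-level "stage" annotations (third tuple element).
MODULES = {
    "anat1": ("File", {"name": "anatomy1.hdr"}, {}),
    "warp1": ("AlignWarp", {"model": "12"}, {"stage": "1"}),
    "resl1": ("Reslice", {}, {"stage": "2"}),
    "mean": ("SoftMean", {}, {"stage": "3"}),
    "slx": ("Slicer", {"axis": "x"}, {"stage": "4"}),
    "cvx": ("Convert", {}, {"stage": "5"}),
    "sinkx": ("FileSink", {"name": "atlas-x.gif"}, {}),
}
ORDER = list(MODULES)
EDGES = list(zip(ORDER, ORDER[1:]))
EXECUTED = set(MODULES)


def upstream(xs):
    """All modules that transitively precede the modules in `xs`."""
    result, frontier = set(), {s for s, d in EDGES if d in xs}
    while frontier:
        result |= frontier
        frontier = {s for s, d in EDGES if d in frontier} - result
    return result


def query3(stages=frozenset({"3", "4", "5"})):
    answer = set()
    for x, (mtype, params, _ann) in MODULES.items():
        if mtype == "FileSink" and params.get("name") == "atlas-x.gif" and x in EXECUTED:
            # The stage test applies to the modules in x's provenance, not to x alone.
            answer |= {y for y in (upstream({x}) | {x})
                       if MODULES[y][2].get("stage") in stages}
    return answer


print(sorted(query3()))
```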

4. Find all invocations of procedure align_warp using a twelfth order nonlinear 1365 parameter model (see model menu describing possible values of parameter "-m 12" of align_warp) that ran on a Monday.

Notice that here we must use the log to determine when the workflows were run, but we need not examine the log to know which workflows match the invocations we are searching for. Recall that our model separates workflow specification from execution. We therefore query the workflow for modules that match the given type and parameters, and query the log to keep only those run on a Monday.

wf{*}: x where x.module = AlignWarp and x.parameter('model') = '12' and (log{x}: y where y.dayOfWeek = 'Monday')

Visual Results
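The split between specification and log in query 4 can be sketched with Python's built-in `sqlite3` module. SQLite stands in for the MySQL database described earlier, and the `execution` table, its columns, and the rows are hypothetical. The specification side filters AlignWarp modules with model '12'. The log side uses SQLite's `strftime('%w', ...)`, which returns '0' for Sunday, so '1' means Monday. 11 September 2006 was a Monday.

```python
import sqlite3

# Hypothetical workflow specification: module id -> (type, parameters).
MODULES = {
    "warp1": ("AlignWarp", {"model": "12"}),
    "warp2": ("AlignWarp", {"model": "12"}),
    "warp3": ("AlignWarp", {"model": "6"}),
    "mean": ("SoftMean", {}),
}

# Hypothetical execution log (SQLite standing in for MySQL).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE execution (vistrail_node INTEGER, module_id TEXT,"
    " machine TEXT, started TEXT, seconds REAL)"
)
conn.executemany(
    "INSERT INTO execution VALUES (?, ?, ?, ?, ?)",
    [
        (7, "warp1", "hostA", "2006-09-11 09:30:00", 12.5),  # Monday
        (7, "warp2", "hostA", "2006-09-12 10:00:00", 11.0),  # Tuesday
        (7, "warp3", "hostB", "2006-09-11 11:15:00", 3.2),   # Monday
    ],
)


def ran_on_monday(module_id):
    """Log-level test: does any execution record of this module fall on a Monday?"""
    row = conn.execute(
        "SELECT 1 FROM execution WHERE module_id = ? AND strftime('%w', started) = '1'",
        (module_id,),
    ).fetchone()
    return row is not None


def query4():
    """Workflow-level filter combined with the log-level Monday test."""
    return {
        x for x, (mtype, params) in MODULES.items()
        if mtype == "AlignWarp" and params.get("model") == "12" and ran_on_monday(x)
    }


print(query4())
```

With a single workflow version, matching on `module_id` alone suffices. With many versions, the lookup would also filter on `vistrail_node`.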

5. Find all Atlas Graphic images outputted from workflows where at least one of the input Anatomy Headers had an entry global maximum=4095. The contents of a header file can be extracted as text using the scanheader AIR utility.

Here, we do not know the values in the anatomy headers until execution, so we must search the log. We have incorporated a mechanism into the logging functionality of VisTrails for storing execution-time annotations, which we use to save file-header information.

wf{*}: upstream(x) where x.module = FileSink and matches(x.parameter('name'), '.*atlas.*\.gif') and y in upstream(x) and y.module = File and (log{y}: z where z.annotation('globalmaximum') = '4095')

Visual Results
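Query 5 can be sketched across two hypothetical workflow versions whose input headers were logged with different global maxima. The version and module ids, the `globalmaximum` values, and the dictionary used for execution-time annotations are all illustrative. The sketch also assumes `matches()` means a full-string regular-expression match, implemented here with `re.fullmatch`. It returns the matching FileSink modules; the query above additionally highlights their upstream modules.

```python
import re


def chain(anatomy):
    """Hypothetical workflow version: File -> AlignWarp -> SoftMean -> FileSink."""
    return {
        "modules": {
            "anat1": ("File", {"name": anatomy}),
            "warp1": ("AlignWarp", {"model": "12"}),
            "mean": ("SoftMean", {}),
            "sink": ("FileSink", {"name": "atlas-x.gif"}),
        },
        "edges": [("anat1", "warp1"), ("warp1", "mean"), ("mean", "sink")],
    }


VERSIONS = {"v1": chain("anatomy1.hdr"), "v2": chain("anatomy2.hdr")}

# Hypothetical execution-time annotations keyed by (version, module id).
LOG_ANNOTATIONS = {
    ("v1", "anat1"): {"globalmaximum": "4095"},
    ("v2", "anat1"): {"globalmaximum": "255"},
}


def upstream(edges, xs):
    """All modules that transitively precede the modules in `xs`."""
    result, frontier = set(), {s for s, d in edges if d in xs}
    while frontier:
        result |= frontier
        frontier = {s for s, d in edges if d in frontier} - result
    return result


def query5():
    hits = set()
    for vid, wf in VERSIONS.items():
        mods = wf["modules"]
        for x, (mtype, params) in mods.items():
            if mtype != "FileSink" or not re.fullmatch(r".*atlas.*\.gif", params.get("name", "")):
                continue
            if any(
                mods[y][0] == "File"
                and LOG_ANNOTATIONS.get((vid, y), {}).get("globalmaximum") == "4095"
                for y in upstream(wf["edges"], {x})
            ):
                hits.add((vid, x))
    return hits


print(query5())
```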

6. Find all output averaged images of softmean (average) procedures, where the warped images taken as input were align_warped using a twelfth order nonlinear 1365 parameter model, i.e. "where softmean was preceded in the workflow, directly or indirectly, by an align_warp procedure with argument -m 12."

This query asks for intermediate data products of a workflow. Since our current implementation does not store intermediate data, we show the user the part of the workflow needed to generate these data products and allow them to add modules to save the intermediate files upon running the workflow.

wf{*}: upstream(x) union x where x.module = SoftMean and executed(x) and y in upstream(x) and y.module = AlignWarp and y.parameter('model') = '12'

Visual Results
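Query 6 can be sketched over three hypothetical versions of a workflow. The first uses model '12' and has run, the second uses a different model, and the third has never been executed. For each executed SoftMean preceded by an AlignWarp with model '12', the sketch returns the portion of the workflow that produces the averaged image. Ids, the Reslice type, and the log contents are illustrative.

```python
def chain(model):
    """Hypothetical workflow version: File -> AlignWarp -> Reslice -> SoftMean."""
    return {
        "modules": {
            "anat1": ("File", {"name": "anatomy1.hdr"}),
            "warp1": ("AlignWarp", {"model": model}),
            "resl1": ("Reslice", {}),
            "mean": ("SoftMean", {}),
        },
        "edges": [("anat1", "warp1"), ("warp1", "resl1"), ("resl1", "mean")],
    }


VERSIONS = {"v1": chain("12"), "v2": chain("6"), "v3": chain("12")}
# Hypothetical log contents: (version, module id) pairs that ran; v3 never ran.
EXECUTED = {(v, m) for v in ("v1", "v2") for m in ("anat1", "warp1", "resl1", "mean")}


def upstream(edges, xs):
    """All modules that transitively precede the modules in `xs`."""
    result, frontier = set(), {s for s, d in edges if d in xs}
    while frontier:
        result |= frontier
        frontier = {s for s, d in edges if d in frontier} - result
    return result


def query6():
    answer = {}
    for vid, wf in VERSIONS.items():
        mods = wf["modules"]
        for x, (mtype, _params) in mods.items():
            if mtype != "SoftMean" or (vid, x) not in EXECUTED:
                continue
            ups = upstream(wf["edges"], {x})
            if any(mods[y][0] == "AlignWarp" and mods[y][1].get("model") == "12" for y in ups):
                answer[vid] = ups | {x}  # sub-workflow producing the averaged image
    return answer


print(query6())
```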

7. A user has run the workflow twice, in the second instance replacing each procedure (convert) in the final stage with two procedures: pgmtoppm, then pnmtojpeg. Find the differences between the two workflow runs. The exact level of detail in the difference that is detected by a system is up to each participant.

For this query, all we need is a visual difference between the two workflows. This is a built-in feature of VisTrails: module differences are highlighted by color, and parameter differences are shown in a table.

Visual Results
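The module and parameter comparison behind such a difference view can be sketched as below. This is not the VisTrails implementation. It assumes modules that were kept between versions retain their ids, which the action-based model makes natural, and it compares only modules and parameters, ignoring connections. The type names PgmToPpm and PnmToJpeg and the output file name are hypothetical.

```python
def diff(old, new):
    """Compare two workflows given as {module id: (type, params)}."""
    removed = {m: old[m][0] for m in old.keys() - new.keys()}
    added = {m: new[m][0] for m in new.keys() - old.keys()}
    changed = {}
    for m in old.keys() & new.keys():
        old_p, new_p = old[m][1], new[m][1]
        delta = {k: (old_p.get(k), new_p.get(k))
                 for k in old_p.keys() | new_p.keys()
                 if old_p.get(k) != new_p.get(k)}
        if delta:
            changed[m] = delta
    return removed, added, changed


# Hypothetical final stage of the two runs.
run1 = {
    "slx": ("Slicer", {"axis": "x"}),
    "cvx": ("Convert", {}),
    "sink": ("FileSink", {"name": "atlas-x.gif"}),
}
run2 = {
    "slx": ("Slicer", {"axis": "x"}),
    "ppm": ("PgmToPpm", {}),
    "jpg": ("PnmToJpeg", {}),
    "sink": ("FileSink", {"name": "atlas-x.jpg"}),
}
print(diff(run1, run2))
```

The three parts of the result map onto the visual cues described above. Removed and added modules would be colored in the Builder, and the changed-parameter table lists old and new values side by side.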

8. A user has annotated some anatomy images with a key-value pair center=UChicago. Find the outputs of align_warp where the inputs are annotated with center=UChicago.

Because there is no specification of how images are annotated, we assume that these are module-level annotations on the modules that feed into the AlignWarp module. Again, we show the user the process that generates the intermediate data products, allowing them to create or modify the pipeline to save this data.

wf{*}: upstream(x) union x where x.module = AlignWarp and y in inputs(x) and y.annotation('center') = 'UChicago'

Visual Results
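The distinction between `inputs` and `upstream` in query 8 can be seen in a small sketch. The annotation test uses only the immediate inputs of each AlignWarp, while the returned process uses everything upstream of it. Module ids and annotations are hypothetical; the reference image is shared by both AlignWarp modules.

```python
# Hypothetical workflow: two AlignWarp modules sharing one reference image.
MODULES = {
    "anat1": ("File", {"name": "anatomy1.hdr"}, {"center": "UChicago"}),
    "anat2": ("File", {"name": "anatomy2.hdr"}, {}),
    "ref": ("File", {"name": "reference.hdr"}, {}),
    "warp1": ("AlignWarp", {"model": "12"}, {}),
    "warp2": ("AlignWarp", {"model": "12"}, {}),
}
EDGES = [("anat1", "warp1"), ("ref", "warp1"), ("anat2", "warp2"), ("ref", "warp2")]


def inputs(xs):
    """Modules that immediately precede any module in `xs`."""
    return {s for s, d in EDGES if d in xs}


def upstream(xs):
    """All modules that transitively precede the modules in `xs`."""
    result, frontier = set(), inputs(xs)
    while frontier:
        result |= frontier
        frontier = inputs(frontier) - result
    return result


def query8():
    answer = set()
    for x, (mtype, _params, _ann) in MODULES.items():
        if mtype == "AlignWarp" and any(
            MODULES[y][2].get("center") == "UChicago" for y in inputs({x})
        ):
            answer |= upstream({x}) | {x}
    return answer


print(sorted(query8()))
```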

9. A user has annotated some atlas graphics with key-value pair where the key is studyModality. Find all the graphical atlas sets that have metadata annotation studyModality with values speech, visual or audio, and return all other annotations on these files.

Again, we assume that the annotations are stored in the workflow specification. The modules that match are highlighted, and the user can examine the other annotations for that file by clicking on the module and selecting the annotations tab.

wf{*}: x where x.module = File and x.annotation('studyModality') in {'speech', 'visual', 'audio'}

Visual Results
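Query 9 reduces to a filter on module annotations that returns each match together with all of its annotations. This mirrors what the user sees in the annotations tab. The module ids and annotation values below are hypothetical.

```python
# Hypothetical File modules with module-level annotations.
MODULES = {
    "gx": ("File", {"name": "atlas-x.gif"}, {"studyModality": "speech", "center": "UChicago"}),
    "gy": ("File", {"name": "atlas-y.gif"}, {"studyModality": "visual"}),
    "gz": ("File", {"name": "atlas-z.gif"}, {"studyModality": "olfactory"}),
    "anat1": ("File", {"name": "anatomy1.hdr"}, {}),
}


def query9(wanted=frozenset({"speech", "visual", "audio"})):
    """Matching File modules mapped to all of their annotations."""
    return {
        x: dict(ann)
        for x, (mtype, _params, ann) in MODULES.items()
        if mtype == "File" and ann.get("studyModality") in wanted
    }


print(query9())
```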

Suggested Workflow Variants

One key feature of the VisTrails system is that it allows the exploration of the parameter space. Using this feature, the workflows shown in Figures 2 and 3 could be condensed by performing the slicing operations once with multiple parameter values specified. This would simplify creating and maintaining the workflows.

Suggested Queries

An important part of workflow systems is their ability to support collaboration. The VisTrails system was designed to allow multiple users to create and share workflows. In the system, each version is color-coded based on the user who made the changes that created it and the date on which those changes were made. This allows us to perform queries about the creation process, such as "Which workflows did Carlos create last week?" Such queries could be performed on a single vistrail or over a collection of vistrails stored in a database.

Categorisation of queries

We believe that the specification of a workflow should be separate from its instances. Thus, our system tracks the evolution of workflows separately from their executions. This separation yields a natural query structure: there are queries that act on versions, on workflows, and/or on executions. By categorizing the queries in this way, we were able to handle the entire range of queries in this challenge.

Live systems

The VisTrails system is under development and is currently being prepared for public release.

Further Comments

This challenge was very beneficial for our team, because the variety of queries made us realize that we needed to extend our querying mechanism. The result is a more robust system. We look forward to future provenance challenges!

Conclusions

The VisTrails system provides the necessary infrastructure to track the provenance of workflow creation, maintenance, and execution. Because of the flexibility of the system, our small team was able to add the necessary new features and compose the queries for the challenge in about two weeks. A key feature that distinguishes our system from others is that it provides a way to visually analyze query results in the same framework in which the workflows are created and explored. The result is a powerful tool for facilitating scientific exploration and reducing the time to insight. In the future, we would like to incorporate our query language into the interface so that these types of queries can be easily composed by a user. We also plan to finish our prototype and release the system to the general public.

| Attachment | Size | Date | Who | Comment |
|---|---|---|---|---|
| provenance.xml | 95.1 K | 12 Sep 2006 - 23:45 | EmanueleSantos | Provenance Challenge vistrails file |


Revisions: | r1.11 | > | r1.10 | > | r1.9 | Total page history | Backlinks
