
Provenance Challenge: Karma3, Indiana University

Participating Team

Team and Project Details

- Short team name: Karma

- Participant names: Girish Subramanian, Bin Cao, Beth Plale

- Project URL: http://www.dataandsearch.org/provenance/karma

- Project Overview: Karma is a provenance collection and management system developed at Indiana University. It captures both process provenance and data provenance in user-driven workflow systems. Karma is not tightly coupled to a particular workflow system; it views domain science interaction with cyberinfrastructure as driven either by a user-directed workflow (no orchestration tool in use) or by a workflow orchestration system. Karma provenance collection is implemented through modular instrumentation, which makes it usable in diverse workflow architectures. Karma stores provenance data in a two-layer information model that can represent both execution information and higher-level process details for the purpose of long-term preservation.

- Relevant Publications: http://www.dataandsearch.org/provenance/publications

Workflow Representation

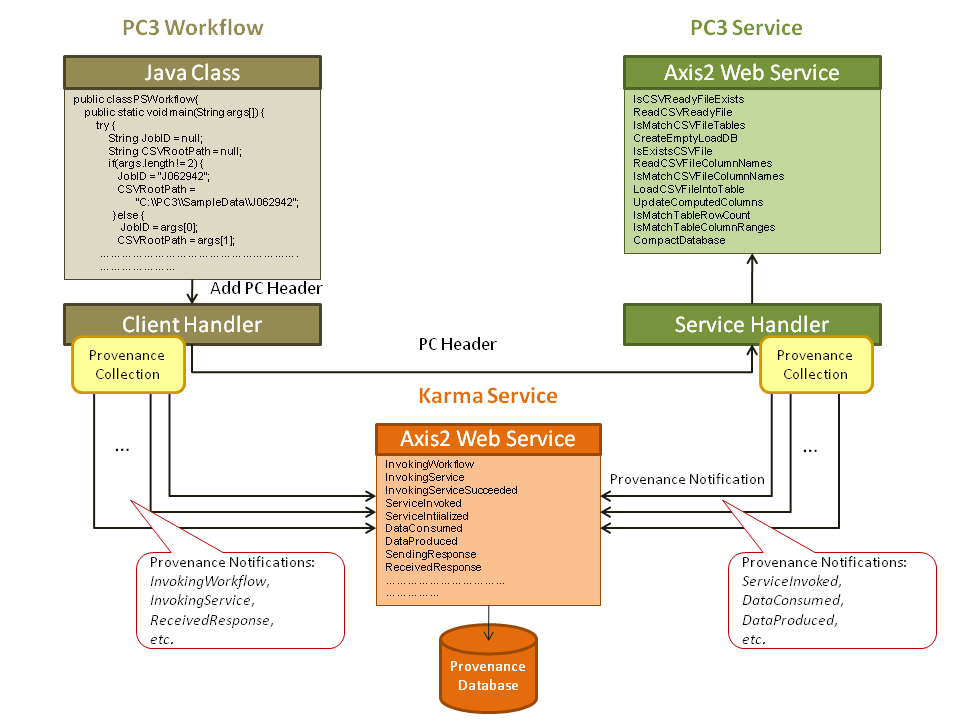

As described in the project overview, Karma is not tightly coupled to a particular workflow system, and provenance collection is implemented through modular instrumentation, which gives Karma the flexibility to capture provenance from different sources and diverse workflow architectures. In all our previous applications, Karma was implemented as a messenger bus listener; in this project, provenance data were instead ingested synchronously through the Karma web service. We simulate the workflow execution using a client-service scenario, where a client (which could be a workflow engine, e.g., ODE) schedules and invokes each service. The figure above shows how we set up the Third Provenance Challenge (PC3). There are three components, all of them built upon the Axis2 web service.

- Karma Service.

Karma service defines the provenance web service. It supports the following methods for PC3 Workflow and PC3 Service to handle provenance data. These methods are similar to provenance notifications used in the messenger bus setting and can be roughly classified into three categories:

Client side: InvokingWorkflow/InvokingWorkflowSucceeded/InvokingWorkflowFailed, InvokingService/InvokingServiceSucceeded/InvokingServiceFailed, ReceivedResponse/ReceivedFault, WorkflowInitialized/WorkflowTerminated, ServiceInitialized/ServiceTerminated, WorkflowFailed/SendingFault.

Service side: WorkflowInvoked/ServiceInvoked, SendingResponse/SendingResponseSucceeded/SendingResponseFailed.

Data: DataConsumed/DataProduced, DataReceivedStarted/DataReceivedFinished, DataSendStarted/DataSendFinished.

Each method retrieves the parameters from the "notification" and stores the data in the provenance database. The Karma service also supports exporting the result as OPM graphs.

- PC3 Service.

PC3 Service provides the web service for PC3, which includes all methods used in the PC3 workflow. Provenance data is collected and sent to Karma Service by the Provenance Handler, which calls the service-side and data methods defined in Karma Service.

- PC3 Workflow.

PC3 Workflow uses the methods defined in PC3 Service to simulate the execution of the PC3 workflow. Provenance data is collected and sent to Karma Service by the Provenance Client Handler, which calls the client-side methods defined in Karma Service.
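The split between client-side, service-side, and data notifications can be illustrated with a minimal Python sketch. It is not the Karma implementation: `KarmaServiceStub`, `service_side`, and `client_invoke` are hypothetical names, the stub keeps notifications in memory instead of a database, and the order in which notifications are emitted is an assumption made for illustration. Only the notification names come from the lists above.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Tuple


@dataclass
class KarmaServiceStub:
    """Hypothetical in-memory stand-in for the Karma web service."""
    log: List[Tuple[str, str, Any]] = field(default_factory=list)

    def notify(self, kind: str, entity: str, payload: Any = None) -> None:
        # A real service would parse the notification and store it in a database.
        self.log.append((kind, entity, payload))


def service_side(karma: KarmaServiceStub, name: str,
                 func: Callable[[Any], Any]) -> Callable[[Any], Any]:
    """Wrap a service method so it reports service-side and data notifications."""
    def wrapped(arg: Any) -> Any:
        karma.notify("ServiceInvoked", name)
        karma.notify("DataConsumed", name, arg)
        result = func(arg)
        karma.notify("DataProduced", name, result)
        karma.notify("SendingResponse", name)
        return result
    return wrapped


def client_invoke(karma: KarmaServiceStub, name: str,
                  service: Callable[[Any], Any], arg: Any) -> Any:
    """Invoke a wrapped service and report the client-side notifications."""
    karma.notify("InvokingService", name)
    try:
        result = service(arg)
    except Exception as exc:
        karma.notify("ReceivedFault", name, str(exc))
        raise
    karma.notify("ReceivedResponse", name)
    return result


karma = KarmaServiceStub()
# Placeholder service body: always reports that the ready file exists.
is_ready = service_side(karma, "IsCSVReadyFileExists", lambda path: True)
outcome = client_invoke(karma, "IsCSVReadyFileExists", is_ready,
                        "C:\\PC3\\SampleData\\J062942")
kinds = [kind for kind, _, _ in karma.log]
```

In this sketch the client handler and the service handler never talk to each other directly; both only report to the provenance service, which mirrors the setup described above.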

Open Provenance Model Output

OPM defines three entities, which can be represented in Karma as follows:

- Artifact: data product (data granule and data collection)

- Process: entity (could be a workflow (composite service), service, or method)

- Agent: client (could be a user or a workflow engine that initiates the "workflow")

OPM also defines five types of causal dependencies:

- Type 1: process used artifact

- Type 2: artifact was generated by a process

- Type 3: process was triggered by another process

- Type 4: artifact was derived from another artifact

- Type 5: process was controlled by agent

In Karma's information model, these dependencies are expressed as follows:

- Method invocation has input data granule/collection (Type 1)

- Data granule/collection Inverse(has output) method invocation (Type 2)

- Abstract service has next method another abstract service (Type 3)

- Composite service has sub method another abstract service (Type 3)

- Data granule 2 inverse(has output) method invocation has input data granule 1 (Type 4)

- Service instance inverse(invokes) client (Type 5)

The elements of the OPM graph are retrieved from the Karma database as follows:

- artifact: from the data_product table.

- process: from the entity table.

- used: from the data_consumed table.

- wasGeneratedBy: from the data_produced table.

- wasTriggeredBy: in our implementation, ODE schedules the workflow execution; thus, there are no direct relationships between services. In other words, a service does not know which service was invoked previously or will be invoked next. The only relationship that can link two services is the data dependency, which can be obtained by joining the data_consumed table and the data_produced table for the tuples with the same data product ids.

- wasDerivedFrom: similar to wasTriggeredBy, wasDerivedFrom can be obtained by joining the data_consumed table and the data_produced table for the tuples with the same entity id.
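The table-to-edge mapping above can be sketched in Python with an in-memory SQLite database. This is an illustration under assumptions, not the Karma exporter: the two tables are reduced to hypothetical two-column versions (`entity_id`, `data_product_id`), the rows are toy data, and `opm_edges` is a name introduced here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical, simplified versions of the Karma tables.
    CREATE TABLE data_consumed (entity_id TEXT, data_product_id TEXT);
    CREATE TABLE data_produced (entity_id TEXT, data_product_id TEXT);
    -- Toy rows: step A reads d0 and writes d1; step B reads d1 and writes d2.
    INSERT INTO data_consumed VALUES ('A', 'd0'), ('B', 'd1');
    INSERT INTO data_produced VALUES ('A', 'd1'), ('B', 'd2');
""")


def opm_edges(conn):
    """Return (edge_type, effect, cause) tuples derived from the two tables."""
    edges = []
    # used: process -> artifact, one edge per data_consumed row.
    for entity, product in conn.execute(
            "SELECT entity_id, data_product_id FROM data_consumed ORDER BY 1, 2"):
        edges.append(("used", entity, product))
    # wasGeneratedBy: artifact -> process, one edge per data_produced row.
    for entity, product in conn.execute(
            "SELECT entity_id, data_product_id FROM data_produced ORDER BY 1, 2"):
        edges.append(("wasGeneratedBy", product, entity))
    # wasTriggeredBy: join on the same data product id (consumer <- producer).
    for consumer, producer in conn.execute("""
            SELECT c.entity_id, p.entity_id
            FROM data_consumed c
            JOIN data_produced p ON c.data_product_id = p.data_product_id
            ORDER BY 1, 2"""):
        edges.append(("wasTriggeredBy", consumer, producer))
    # wasDerivedFrom: join on the same entity id (output <- input).
    for output, source in conn.execute("""
            SELECT p.data_product_id, c.data_product_id
            FROM data_consumed c
            JOIN data_produced p ON c.entity_id = p.entity_id
            ORDER BY 1, 2"""):
        edges.append(("wasDerivedFrom", output, source))
    return edges


edges = opm_edges(conn)
```

Note that the two joins differ only in the join key: matching data product ids links two processes, while matching entity ids links two artifacts.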

| Job ID | OPM Output |
|---|---|
| J062941 | J062941-opm.xml |
| J062942 | J062942-opm.xml |
| J062943 | J062943-opm.xml |
| J062944 | J062944-opm.xml |
| J062945 | J062945-opm.xml |

Query Results

Core Queries

Query 1

For a given detection, which CSV files contributed to it?

Since Karma does not instrument database provenance, we query the detection IDs from the P2Detection table after the LoadCSVFileIntoTable execution and send them to Karma as a data product produced by LoadCSVFileIntoTable. The data product is stored in XML format and can be retrieved with an XPath query.

select distinct extractvalue(desc_and_annotation, '//ns2:FileEntry/ns1:FilePath')

from data_consumed

where entity_id = (select entity_id

from data_produced

where extractvalue(desc_and_annotation, '/detectionIDs/detectID=261887481030000003'));

Result:

C:\PC3\SampleData\J062942\P2_J062942_B001_P2fits0_20081115_P2Detection.csv

Query 2

The user considers a table to contain values they do not expect. Was the range check (IsMatchTableColumnRanges) performed for this table?

We answer this query by checking whether the given table was consumed by IsMatchTableColumnRanges. Assume the table is P2Detection.

select count(*)

from (select c.desc_and_annotation

from data_consumed c, entity e

where c.entity_id = e.entity_id and

e.workflow_node_id = 'IsMatchTableColumnRanges') ce

where extractvalue(ce.desc_and_annotation,'/ns2:IsMatchTableColumnRanges/ns2:FileEntry/ns1:TargetTable')='P2Detection'

Result:

count for table P2Detection: 2

Yes, IsMatchTableColumnRanges was performed on P2Detection.

Query 3

Which operation executions were strictly necessary for the Image table to contain a particular (non-computed) value?

We answer this query in three steps. First, find the entity for LoadCSVFileIntoTable and the data (P2ImageMeta) consumed by LoadCSVFileIntoTable.

select distinct c.entity_id

from data_consumed c, entity e

where extractvalue(c.desc_and_annotation, '//ns1:TargetTable') = 'P2ImageMeta' and

workflow_node_id = 'LoadCSVFileIntoTable' and

c.entity_id = e.entity_id

Second, recursively perform the following query to find all causal dependencies for the entity LoadCSVFileIntoTable. Note that _entityID_ is a variable.

select entity_id

from data_produced p,

(select data_product_id

from data_consumed

where entity_id = _entityID_) c

where p.data_product_id = c.data_product_id
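The recursion in the second step is driven from outside the SQL: the query is re-run for each newly found entity until no new producers appear. A minimal Python sketch of that loop follows, assuming simplified two-column tables in an in-memory SQLite database and toy rows; `upstream_entities` is a hypothetical helper name, not part of Karma.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical, simplified versions of the Karma tables.
    CREATE TABLE data_consumed (entity_id TEXT, data_product_id TEXT);
    CREATE TABLE data_produced (entity_id TEXT, data_product_id TEXT);
    -- Toy chain: e1 -> d1 -> e2 -> d2 -> e3; e4 is unrelated.
    INSERT INTO data_produced VALUES ('e1', 'd1'), ('e2', 'd2'), ('e4', 'd4');
    INSERT INTO data_consumed VALUES ('e2', 'd1'), ('e3', 'd2');
""")

# Same join as the query above, with the entity id as a bound parameter.
UPSTREAM_SQL = """
    SELECT p.entity_id
    FROM data_produced p
    JOIN data_consumed c ON p.data_product_id = c.data_product_id
    WHERE c.entity_id = ?
"""


def upstream_entities(conn, start):
    """Collect every entity that 'start' depends on, directly or transitively."""
    seen = set()
    frontier = [start]
    while frontier:
        current = frontier.pop()
        for (producer,) in conn.execute(UPSTREAM_SQL, (current,)):
            # Skip entities already visited so cycles cannot loop forever.
            if producer not in seen and producer != start:
                seen.add(producer)
                frontier.append(producer)
    return seen


ancestors = upstream_entities(conn, "e3")
```

Here `ancestors` contains `e1` and `e2` but not the unrelated `e4`.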

Finally, we perform a join of the entity table and invocation table to get the iteration information.

select workflow_node_id, extractvalue(desc_and_annotation, '/Iteration')

from entity, invocation

where invokee_id = _entityID_ and entity_id = invokee_id

Result:

| Process | Iteration |
|---|---|
| LoadCSVFileIntoTable | 2 |
| CreateEmptyLoadDB | |
| ReadCSVFileColumnNames | 2 |
| ReadCSVReadyFile | |

Optional Queries

Optional Query 1

The workflow halts due to failing an IsMatchTableColumnRanges check. How many tables successfully loaded before the workflow halted due to a failed check?

To simulate a workflow halt caused by a failed IsMatchTableColumnRanges check, we modify LoadConstants.java in the package info.ipaw.pc3.psloadworkflow.logic of PC3Service, and perform the following query to see how many times IsMatchTableColumnRanges was invoked.

select count(*)

from invocation,

(select entity_id

from entity

where workflow_node_id = 'IsMatchTableColumnRanges') e

where invokee_id = e.entity_id

Result:

3

Since IsMatchTableColumnRanges was invoked three times and one invocation failed, two tables were successfully loaded.

Optional Query 3

A CSV or header file is deleted during the workflow's execution. How much time expired between a successful IsMatchCSVFileTables test (when the file existed) and an unsuccessful IsExistsCSVFile test (when the file had been deleted)?

To answer this query, we delete the file "P2ImageMeta.csv.hdr" during the workflow execution.

select timediff(t1, t2)

from (select production_time as t1

from data_produced

where extractvalue(desc_and_annotation, '/ns2:IsExistsCSVFileResponse/ns2:return')='false') p1,

(select production_time as t2

from data_produced

where extractvalue(desc_and_annotation, '/ns2:IsMatchCSVFileTablesResponse/ns2:return')='true') p2

Result:

00:00:07

Optional Query 5

A user executes the workflow many times (say 5 times) over different sets of data (j062941, j062942, ... j062945). They want to determine which of the executions halted.

To answer this query, we delete the file "P2ImageMeta.csv" for the job j062943.

select extractvalue(desc_and_annotation, '//JobID') as JobID,

extractvalue(desc_and_annotation, '//CVSRootPath') as CSVRootPath

from invocation

where invokee_id = (select entity_id

from entity

where instance = (select e.instance

from data_produced p, entity e

where extractvalue(p.desc_and_annotation, '//ns2:return')='false' and

p.entity_id = e.entity_id) and

workflow_id = 'http://localhost:8080/workflow/pc3' and

service_id = 'http://www.dataandsearch.org/service/null')

Result:

| JobID | CSVRootPath |
|---|---|
| J062943 | C:\PC3\SampleData\J062943 |

Optional Query 6

Determine the step where the halt occurred.

As in Optional Query 1, we simulate a halt caused by a failed IsMatchTableColumnRanges check.

select workflow_node_id, extractvalue(i.desc_and_annotation, '/Iteration') as iteration

from data_produced p, entity e, invocation i

where extractvalue(p.desc_and_annotation, '//ns2:return')='false' and

p.entity_id = e.entity_id and e.entity_id = i.invokee_id

Result:

| workflow_node_id | iteration |
|---|---|
| IsMatchTableColumnRanges | 3 |

Optional Query 8

Which steps were completed successfully before the halt occurred?

Following Optional Query 6,

select workflow_node_id, extractvalue(desc_and_annotation, '/Iteration') as iteration

from entity, invocation

where entity_id = invokee_id and

invocation_id < (select invocation_id

from data_produced p, invocation

where extractvalue(p.desc_and_annotation, '//ns2:return')='false' and

entity_id = invokee_id)

Result:

| workflow_node_id | Iteration |
|---|---|
| IsCSVReadyFileExists | |
| ReadCSVReadyFile | |
| IsMatchCSVFileTables | |
| CreateEmptyLoadDB | |
| IsExistsCSVFile | 1 |
| ReadCSVFileColumnNames | 1 |
| IsMatchCSVFileColumnNames | 1 |
| LoadCSVFileIntoTable | 1 |
| UpdateComputedColumns | 1 |
| IsMatchTableRowCount | 1 |
| IsMatchTableColumnRanges | 1 |
| IsExistsCSVFile | 2 |
| ReadCSVFileColumnNames | 2 |
| IsMatchCSVFileColumnNames | 2 |
| LoadCSVFileIntoTable | 2 |
| UpdateComputedColumns | 2 |
| IsMatchTableRowCount | 2 |
| IsMatchTableColumnRanges | 2 |
| IsExistsCSVFile | 3 |
| ReadCSVFileColumnNames | 3 |
| IsMatchCSVFileColumnNames | 3 |
| LoadCSVFileIntoTable | 3 |
| UpdateComputedColumns | 3 |
| IsMatchTableRowCount | 3 |
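The idea behind this query, selecting every invocation whose id precedes the invocation that returned false, can be tried on toy data. The sketch below is an assumption-laden illustration: it uses an in-memory SQLite database, replaces the XML lookup with a hypothetical plain `return_value` column, and uses `MIN` so the subquery yields a single id even if several steps returned false, which differs slightly from the query above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical, simplified versions of the Karma tables.
    CREATE TABLE entity (entity_id TEXT, workflow_node_id TEXT);
    CREATE TABLE invocation (invocation_id INTEGER, invokee_id TEXT);
    CREATE TABLE data_produced (entity_id TEXT, return_value TEXT);
    INSERT INTO entity VALUES
        ('e1', 'IsExistsCSVFile'),
        ('e2', 'LoadCSVFileIntoTable'),
        ('e3', 'IsMatchTableColumnRanges');
    INSERT INTO invocation VALUES (1, 'e1'), (2, 'e2'), (3, 'e3');
    -- e3 is the failing check in this toy run.
    INSERT INTO data_produced VALUES ('e1', 'true'), ('e3', 'false');
""")

completed = [row[0] for row in conn.execute("""
    SELECT e.workflow_node_id
    FROM entity e
    JOIN invocation i ON e.entity_id = i.invokee_id
    WHERE i.invocation_id < (
        SELECT MIN(i2.invocation_id)
        FROM data_produced p
        JOIN invocation i2 ON p.entity_id = i2.invokee_id
        WHERE p.return_value = 'false')
    ORDER BY i.invocation_id
""")]
```

The approach relies on invocation ids increasing in execution order, which holds for the toy rows here and is assumed, not shown, for the real database.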

Optional Query 10

For a workflow execution, determine the user inputs.

We have two solutions for this question. Solution 1: we find the data products that appear in the data_consumed table but not in the data_produced table.

select distinct data_consumed.data_product_id

from data_consumed left join data_produced

on data_consumed.data_product_id = data_produced.data_product_id

where data_produced.data_product_id IS NULL;

Result:

www.dataandsearch.org/data/CSVRootPath/c5a14830-7cb8-41ec-a949-c50ece4987de

www.dataandsearch.org/data/JobID/c5a14830-7cb8-41ec-a949-c50ece4987de

Solution 2: we send the user input along with the "InvokingWorkflow" notification. Thus, we directly join the invocation and invocation_status tables to get the data product that contains the user input.

select i.desc_and_annotation

from invocation i, invocation_status

where i.invocation_id = invocation_status.invocation_id and

invocation_status = 'InvokingWorkflow'

into @xml;

select extractvalue(@xml, '//JobID');

select extractvalue(@xml, '//CVSRootPath');

Result:

JobID: J062942

CVSRootPath: C:\PC3\SampleData\J062942
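Solution 1 is an anti-join: a data product that some step consumed but no step produced must have come from outside the workflow. The following sketch reproduces that pattern on toy rows in an in-memory SQLite database. The two-column table layout and the short data product ids are simplifying assumptions, not the actual Karma schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical, simplified versions of the Karma tables.
    CREATE TABLE data_consumed (entity_id TEXT, data_product_id TEXT);
    CREATE TABLE data_produced (entity_id TEXT, data_product_id TEXT);
    -- s1 consumes two user inputs and produces d1; s2 consumes d1.
    INSERT INTO data_consumed VALUES
        ('s1', 'JobID'), ('s1', 'CSVRootPath'), ('s2', 'd1');
    INSERT INTO data_produced VALUES ('s1', 'd1'), ('s2', 'd2');
""")

# Left join, then keep only consumed products with no matching producer row.
user_inputs = [row[0] for row in conn.execute("""
    SELECT DISTINCT c.data_product_id
    FROM data_consumed c
    LEFT JOIN data_produced p ON c.data_product_id = p.data_product_id
    WHERE p.data_product_id IS NULL
    ORDER BY c.data_product_id
""")]
```

The intermediate product `d1` is filtered out because a producer row exists for it, leaving only the two externally supplied inputs.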

Optional Query 11

For a workflow execution, determine the steps that required user inputs.

Following Optional Query 10, get the methods for the user input "CSVRootPath".

select workflow_node_id

from entity

where entity_id in (select entity_id

from data_consumed

where data_product_id = 'www.dataandsearch.org/data/CSVRootPath/c5a14830-7cb8-41ec-a949-c50ece4987de')

Result:

IsCSVReadyFileExists

ReadCSVReadyFile

Next, get the methods for the user input "JobID".

select workflow_node_id

from entity

where entity_id in (select entity_id

from data_consumed

where data_product_id = 'www.dataandsearch.org/data/JobID/c5a14830-7cb8-41ec-a949-c50ece4987de')

Result:

CreateEmptyLoadDB

Optional Query 12

To answer this query, we delete the file P2ImageMeta.csv.hdr.

select distinct extractvalue(c.desc_and_annotation, '//ns2:FileEntry/ns1:TargetTable')

from data_consumed c,

(select entity_id

from data_produced

where extractvalue(desc_and_annotation, '/ns2:IsMatchTableColumnRangesResponse/ns2:return')='true') p

where c.entity_id = p.entity_id

Result:

P2FrameMeta

Optional Query 13

Step dependency: same as the OPM wasTriggeredBy

select p.entity_id as producer, c.entity_id as consumer

from data_consumed c, data_produced p

where c.data_product_id = p.data_product_id

Data dependency: same as the OPM wasDerivedFrom

select c.entity_id, p.data_product_id as output, c.data_product_id as input

from data_consumed c, data_produced p

where c.entity_id = p.entity_id

See OPM output for results.

Suggested Workflow Variants

Suggested Queries

Suggestions for Modification of the Open Provenance Model

Conclusions

-- BinCao - 06 Apr 2009

| Attachment | Size | Date | Who | Comment |
|---|---|---|---|---|
| J062941-opm.xml | 99.6 K | 08 Jun 2009 - 06:09 | BinCao | J062941 OPM |
| J062942-opm.xml | 102.7 K | 08 Jun 2009 - 06:11 | BinCao | J062942 OPM |
| J062943-opm.xml | 137.0 K | 08 Jun 2009 - 06:11 | BinCao | J062943 OPM |
| J062944-opm.xml | 97.3 K | 08 Jun 2009 - 06:12 | BinCao | J062944 OPM |
| J062945-opm.xml | 97.3 K | 08 Jun 2009 - 06:13 | BinCao | J062945 OPM |
| PC3Architecture.png | 39.8 K | 10 Jun 2009 - 04:54 | BinCao | PC3 workflow setup diagram |


Challenge.Karma3 moved from Challenge.KarmaIndianaUniversity on 06 Apr 2009 - 15:06 by BinCao - put it back